Part 3: Does ChatGPT Understand Language?

On December 1, 2023, the Cognitive Science Department at the University of California at San Diego staged a debate between two faculty members titled “Does ChatGPT Understand Language?” The room was packed. The debater on the Nay side started with a version of the “Chinese room argument,” in which Chinese characters are written on paper and slipped under a door to a philosopher, who follows an algorithm and slips Chinese characters out. Does the philosopher understand Chinese? This argument has given rise to many critical replies. In the debate, the Chinese room became a Hungarian room and the philosopher became an LLM, and the debater concluded that LLMs do not understand Hungarian. The same argument can be made if we replace the Hungarian room with a Hungarian brain and the philosopher with the laws of physics. The rhetoric went downhill from there. The debater on the Yea side cited technical papers showing that LLMs have surpassed most humans on standard intelligence tests and entrance exams for medical and law schools, but he was short on rhetoric. A vote was taken at the end: half the audience agreed with Nay, and the rest were split between Yea and Maybe. In my view, one side said the glass was half empty, the other half full; the truth must be somewhere in between. In the question period, I pointed out that linguists believe that the expressivity of language is due to syntax, something that LLMs are better at producing than most humans. The Naysayer dismissed syntax as trivial. As advances keep coming, the bar keeps going up.

Humans often underestimate the intelligence of fellow animals because they can’t talk to us. This negative bias is perhaps an inevitable counterpart of our positive bias toward agents who can talk to us, even though they may be much less intelligent. Are we intelligent enough to judge intelligence? LLMs have been around for only a few years, so it is too early to say what kind of intelligence they or their progeny may have. What was remarkable about the talking dog in Part 2 was that it talked at all, not that what it said was necessarily intelligent or truthful. LLMs respond with confidence even when they are unreliable. We might get a better match if we compare LLMs with the average human rather than an ideal human. The diverging opinions of experts on the intelligence of LLMs suggest that our old ideas based on natural intelligence are inadequate. LLMs can help us get beyond old thinking and old concepts inherited from nineteenth-century psychologists. We need to create a deeper understanding of words like “intelligence,” “thinking,” “understanding,” “ethics,” and even “artificial.”

In an essay published in the New York Times on March 8, 2023, Noam Chomsky, a distinguished linguist, gave his views on thinking as a way of explaining why LLMs cannot think like humans:

Noam Chomsky (New York Times, 3/8/23):

“Here’s an example. Suppose you are holding an apple in your hand. Now you let the apple go. You observe the result and say, “The apple falls.” That is a description. A prediction might have been the statement “The apple will fall if I open my hand.” Both are valuable, and both can be correct. But an explanation is something more: It includes not only descriptions and predictions but also counterfactual conjectures like “Any such object would fall,” plus the additional clause “because of the force of gravity” or “Because of the curvature of space-time” or whatever. That is a causal explanation: “The apple would not have fallen but for the force of gravity.” That is thinking.”

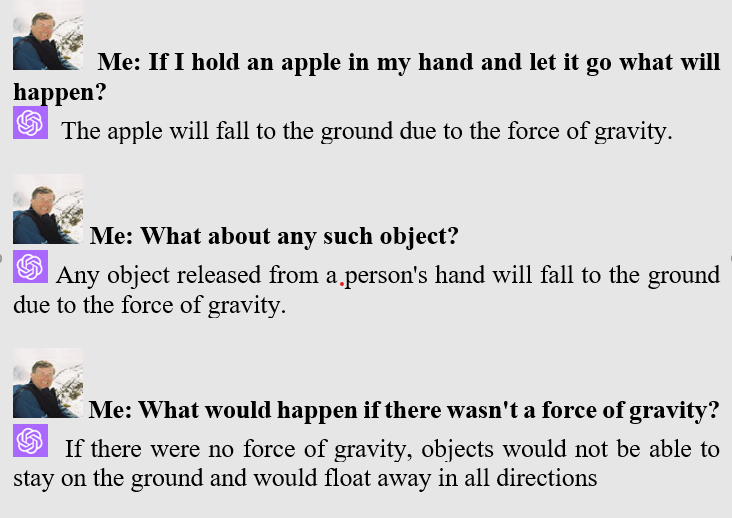

This clearly stated description of some aspects of thinking is convincing. Let’s approach this criticism with experimental evidence. When I tested Chomsky’s example of thinking, ChatGPT passed the test (see box). Whatever thinking is, it will not be easy to describe solely with words. Thinking is too important to be left to the linguists.

Human intelligence is more than language; we may share some aspects of intelligence with LLMs but not others. For example, LLMs can be creative, a hallmark of natural intelligence. Some of the text in the dialogs would be difficult to generate without assuming LLMs had learned to interpret human intentions. We need a better understanding of “intentions.” This “concept” is rooted in the theory of “mind,” which may also bear a closer look. Look up any of the above words in quotation marks in a dictionary. You will find definitions that are strings of other words, and more strings of words define these words. Hundreds of books have been written about “consciousness,” which are longer strings of words, and we still don’t have a working scientific definition.

Language has given humans a unique ability, but words are slippery—a part of their power—and firmer foundations are needed to build new conceptual frameworks. Not too long ago, there was a theory of fire based on the concept of “phlogiston,” a substance released by combustion. In biology, there was a theory of life based on “vitalism,” a mysterious life force. These concepts were flawed, and neither theory survived scientific advances. Now that we have the tools for probing internal brain states and methods for interrogating them, psychological concepts will reify into more concrete constructs, just as the chemistry of fire was explained by the discovery of oxygen and the concept of “life” was explained by the structure of DNA and all the subsequently discovered biochemical mechanisms for gene expression and replication.

We are presented with an unprecedented opportunity, much like the one that changed physics in the seventeenth century. Concepts of “force,” “mass,” and “energy” were mathematically formalized and transformed from vague terms into precise measurable quantities upon which modern physics was built. As we probe LLMs, we may discover new principles about the nature of intelligence, as physicists discovered new principles about the physical world in the twentieth century. Quantum mechanics was highly counterintuitive when it was proposed. When the fundamental principles of intelligence are uncovered, they may be equally counterintuitive. A mathematical understanding of how LLMs can talk would be a good starting point for a new theory of intelligence. LLMs are mathematical functions, very complex functions that are trained by learning algorithms. But at the end of training, they are nothing more than rigorously specified functions. We now know that once they are large enough, these functions have complex behaviors, some resembling how brains behave.

Neural network models are a new class of functions that live in very high-dimensional spaces, and exploring their dynamics could lead to new mathematics. A new mathematical framework could help us better understand how our internal life emerges from our brains interacting with others’ brains in an equally complex world. Our three-dimensional world has shaped our intuitions about geometry and limited our imagination, just as Flatland's two-dimensional creatures struggled to imagine a third dimension.

Cover of the 1884 edition of Flatland: A Romance of Many Dimensions by Edwin A. Abbott. Inhabitants were two-dimensional shapes.

What brains do well is to learn and generalize from unique experiences. The breakthrough in the 1980s with learning in multilayer networks showed us that networks with many parameters could also generalize remarkably well, much better than expected from theorems on data sample complexity in statistics. Assumptions about the statistical properties and dynamics of learning in low-dimensional spaces do not hold in highly overparameterized spaces (now up to trillions of parameters). Progress has already been made in analyzing deep feedforward networks. Still, we must extend these mathematical results to high-dimensional recurrent networks with even more complex behaviors.

Did nature integrate an advanced LLM into an already highly evolved primate brain? By studying LLMs’ uncanny abilities with language, we may uncover general principles of verbal intelligence that may generalize to other aspects of intelligence, such as social intelligence or mechanical intelligence. LLMs are evolving much faster than biological evolution. Once a new technology is established, advances continue to improve performance. This technology is different because we may also discover insights into ourselves.

Part 4 explains ChatGPT’s chameleon-like behavior when responding to different people.
